Why Query Volume Matters in Measuring AI Recommendation Scores

The New Metrics of AI-Driven Search

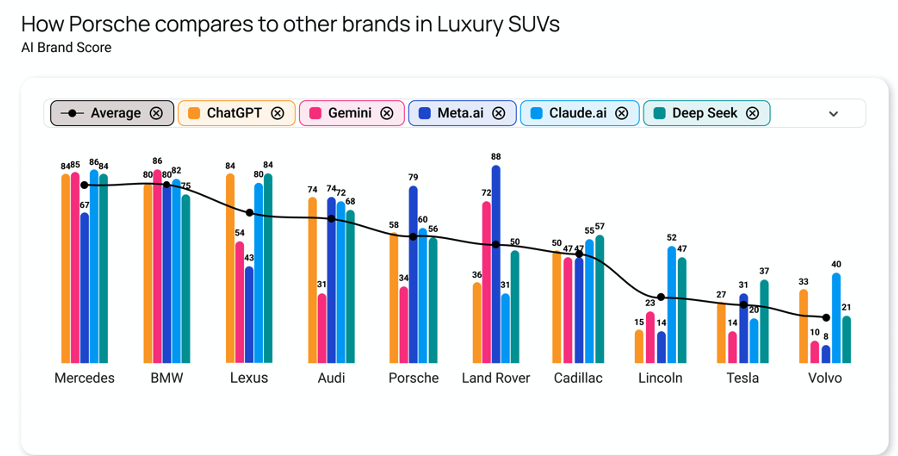

AI-driven search has fundamentally changed how brands are discovered and recommended. Consumers are now guided by large language models (LLMs) like ChatGPT, Gemini, and Claude, so understanding how often your brand appears in their responses is a vital marketing signal.

At reegen.ai, a specialist GEO agency that optimizes brand visibility in LLMs, we conducted a comparative analysis of emerging AI marketing tools and uncovered a striking divide: most platforms fall into one of two camps based on how they measure brand recommendation.

Two Distinct Approaches to Measuring Recommendation Scores

Low-Volume, High-Frequency

These platforms send a single prompt daily and track whether or not a brand is mentioned. While this may sound consistent, the approach is statistically fragile: LLMs sample their output, so a single response can vary widely from one run to the next, especially at higher temperature settings.

High-Volume, Low-Frequency

The second approach sends thousands of prompts monthly to an LLM to simulate real-world variability. This method captures a broader and more reliable picture of brand visibility within AI models. The key idea: the more you ask, the more stable your insights.

What Is an AI Recommendation Score?

Probability Measurement

An AI Recommendation Score represents the probability that a brand will be mentioned by an AI model in response to a category-based prompt, unaided (without the brand being named in the query).

Practical Example

For example, if the model is asked "What is the best database software?" 100 times and mentions Brand X in 52 of those answers, the AI Recommendation Score for Brand X is 52 (a 52% chance of being mentioned).
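The arithmetic behind that example can be sketched in a few lines of Python. This is a minimal illustration, not the scoring method of any particular platform: the function name `recommendation_score`, the simple case-insensitive substring match, and the sample responses are all assumptions made for the example.

```python
def recommendation_score(responses, brand):
    """Percentage of responses that mention `brand`.

    Assumption: a "mention" is a case-insensitive substring match.
    A real tool would likely need more careful brand matching.
    """
    if not responses:
        raise ValueError("need at least one response")
    mentions = sum(1 for text in responses if brand.lower() in text.lower())
    return 100 * mentions / len(responses)


# Hypothetical data: 52 answers that mention Brand X and 48 that do not.
responses = ["Brand X is a solid choice."] * 52 + ["Consider Brand Y instead."] * 48
score = recommendation_score(responses, "Brand X")
print(score)  # 52.0
```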

Statistical Validity

But how accurate is that score if you only asked once? Or even ten times?

Why Query Volume Is the Key to Accuracy

Large language models are non-deterministic—they don’t give the same answer every time. This is what makes query volume essential. It’s not just a best practice—it’s a statistical necessity.

Let’s put it into perspective by looking at what different prompt volumes can tell you:

1 Prompt: You’re firing one arrow, and expecting that all others will hit the same spot.

10 Prompts: Still shaky—results can swing widely.

50 Prompts: Patterns start emerging.

100+ Prompts: You've finally achieved statistical significance.
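One way to quantify the volumes above is the standard margin of error for a proportion. The sketch below assumes each response is an independent draw, uses the normal approximation (which is rough at very small volumes), and borrows the 52% mention rate from the Brand X example as an assumed true rate. The helper name `margin_of_error` is illustrative.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p measured over n prompts.

    Uses the normal approximation z * sqrt(p * (1 - p) / n).
    """
    return z * math.sqrt(p * (1 - p) / n)


p = 0.52  # assumed true mention rate, taken from the Brand X example
for n in (1, 10, 50, 100, 1000):
    print(f"{n:>5} prompts: +/- {100 * margin_of_error(p, n):.1f} points")
```

Under these assumptions the uncertainty is roughly 31 points at 10 prompts, 14 points at 50, and 10 points at 100. It keeps shrinking with the square root of the volume, which is why larger samples give steadier scores.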

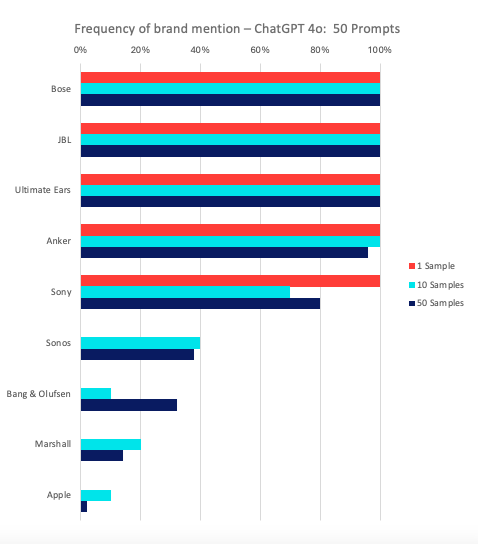

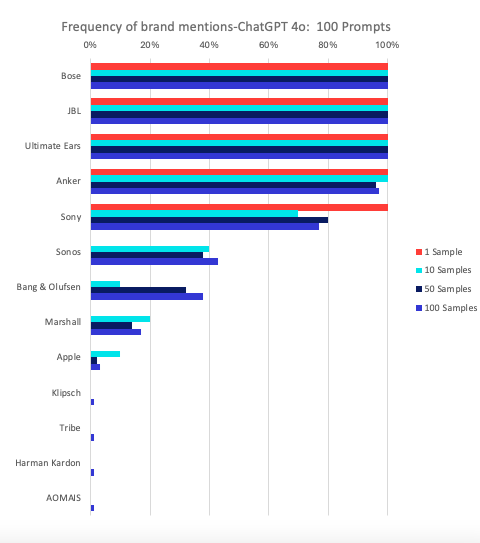

The Diagrams: How AI Recommendation Scores Vary by Prompt Volume

In our analysis*, we visualized how Recommendation Scores evolve when a model is prompted:

Just once “What are the best portable speaker brand?”

Then 10 times “What are the best portable speaker brand?”

Then 50 times “What are the best portable speaker brand?”

And finally, 100 times “What are the best portable speaker brand?”

These diagrams demonstrate just how inconsistent AI outputs can be at lower volumes—and how stable, meaningful insights emerge only at scale.

For example:

After 1 prompt, five brands (Bose, JBL, Ultimate Ears, Anker and Sony) appear, with no visibility for any other brand.

At 10 prompts, Sony's recommendation probability drops.

By 50 prompts, the rankings shuffle again.

But at 100 prompts, a consistent hierarchy stabilizes—offering a clear signal marketers can trust.

This fluctuation proves a critical point: no single prompt should be treated as truth. To understand how AI sees your brand, you must look at the aggregated trend over a statistically significant number of responses.
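The same fluctuation can be reproduced with a small simulation. This is a hedged sketch rather than the analysis behind the diagrams: it assumes a brand with a fixed 52% chance of being mentioned, treats every response as an independent draw, and repeats the measurement many times at each volume to see how much the measured score moves. The function `simulate_scores` and all parameter values are hypothetical.

```python
import random
import statistics


def simulate_scores(true_rate, prompts, trials, rng):
    """Run `trials` simulated measurements, each scoring `prompts` responses."""
    scores = []
    for _ in range(trials):
        # Each simulated response mentions the brand with probability true_rate.
        mentions = sum(rng.random() < true_rate for _ in range(prompts))
        scores.append(100 * mentions / prompts)
    return scores


rng = random.Random(0)  # fixed seed so the run is repeatable
spread = {}
for prompts in (1, 10, 50, 100):
    scores = simulate_scores(0.52, prompts, trials=2000, rng=rng)
    spread[prompts] = statistics.pstdev(scores)
    print(f"{prompts:>3} prompts per run: std dev of score = {spread[prompts]:.1f} points")
```

The standard deviation of the measured score narrows as the number of prompts per run grows, which mirrors the way the rankings settle in the diagrams.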

*Source: Proprietary insights and data analysis by Evertune

What This Means for Marketers

Relying on daily one-off prompts is like polling one person and claiming you’ve captured public opinion. It feels precise, but tells you nothing actionable.

Instead, marketers should base decisions on scores aggregated across a statistically significant number of prompts.

Conclusion: Measure with Rigor, Not Hunches

The AI Recommendation Score is becoming a foundational brand signal in the AI age—but only if measured correctly. High-volume prompting gives marketers an honest view of brand visibility in the minds of AI.

In this new ecosystem, volume isn’t noise—it’s clarity.